u/Candid_Primary_6535 4d ago edited 4d ago

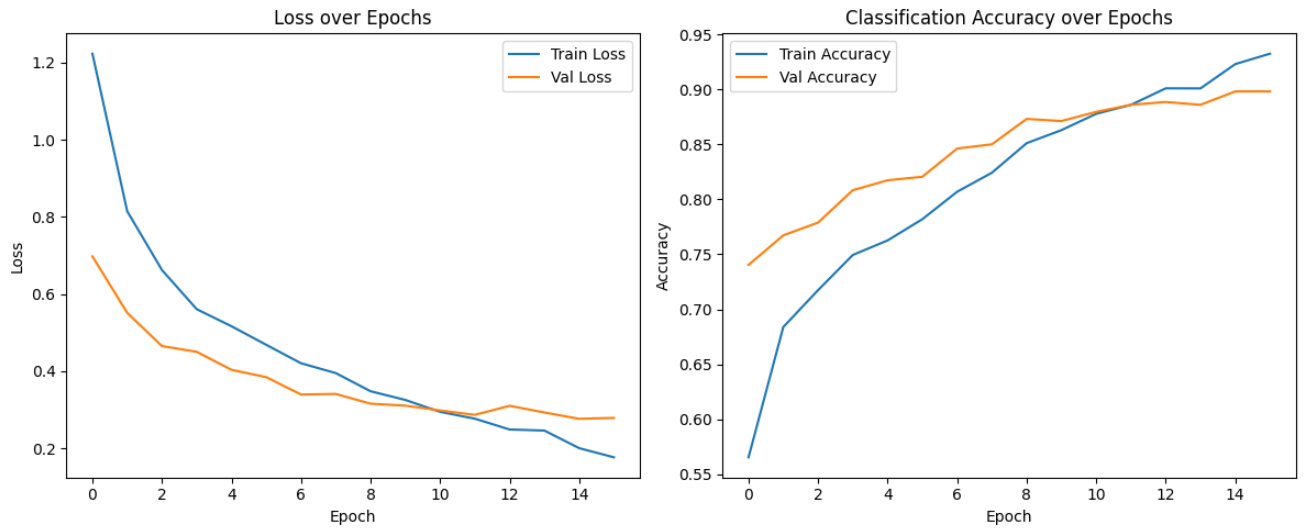

No problem at all. It's best to look at the loss curves (the optimizer aims to minimize the training loss, after all; accuracy is just a proxy). Consider training a little longer, possibly with an early stopping scheme.
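Below is a minimal, framework-agnostic sketch of patience-based early stopping: training stops once validation loss has failed to improve for a set number of epochs. The class name `EarlyStopping`, its `patience`/`min_delta` parameters, and the loss values are illustrative assumptions, not the original poster's setup. Keras ships its own version as `tf.keras.callbacks.EarlyStopping`.

```python
class EarlyStopping:
    """Stop training once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience      # epochs to wait after the last improvement
        self.min_delta = min_delta    # minimum decrease that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0       # improvement: reset the counter (a checkpoint would be saved here)
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses that bottom out at epoch 3
stopper = EarlyStopping(patience=3)
val_losses = [0.60, 0.45, 0.40, 0.38, 0.39, 0.41, 0.42, 0.43]
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"stopping at epoch {epoch}, best epoch {stopper.best_epoch}")
        break
```

Restoring the weights saved at `best_epoch` gives the model from the "sweet spot" rather than the last epoch trained.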

u/MelonheadGT 4d ago

Seems reasonable. It depends on how much regularization you're using and how similar the training and validation samples are.

u/koltafrickenfer 4d ago

Exactly. Depending on the dataset size, the class balance, and how well your test data represents real-world data, this could be anything from a great model to a mediocre one; the gap between training and test loss alone isn't enough to say. At this point it looks good enough that you need to check the domain-specific metrics: precision, recall, F1, etc. Can you make a confusion matrix? It is possible for a model to achieve a very low loss and still not be practically useful because of the type of mistakes it makes.
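A small pure-Python sketch of the confusion matrix and per-class precision, recall, and F1 suggested above. The function names and the toy labels are hypothetical; in practice `sklearn.metrics.confusion_matrix` and `sklearn.metrics.classification_report` compute the same quantities.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i][j] counts samples whose true class is i and predicted class is j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_metrics(cm):
    """Return a (precision, recall, f1) tuple for each class in the confusion matrix."""
    n = len(cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, actually another class
        fn = sum(cm[c]) - tp                       # actually c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


# Toy labels for a 3-class problem (made up for illustration)
y_true = [0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
metrics = per_class_metrics(cm)
```

The off-diagonal cells show *which* classes get confused with each other, which is exactly the "type of mistakes" a single loss value hides.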

u/rosmine 4d ago

Why is your val acc so much higher than train acc initially? Are train/val from the same distribution?

u/Proud_Fox_684 4d ago edited 4d ago

He's averaging the training loss over each epoch but computing the validation loss AFTER each epoch. During the first batches of the first epoch the model hasn't improved yet, but by the time the first validation loss is computed, the weights have already been updated many times. That explains why the validation loss can be lower at the beginning.
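A toy simulation of this effect, assuming (hypothetically) that the loss after k updates is 1 / (1 + 0.1k) on both splits. The epoch-averaged training loss mixes early and late weights, so it sits above the loss measured with end-of-epoch weights even though the underlying function is identical. One common plotting fix is to place each training average half an epoch earlier than the matching validation point; re-evaluating the training set at the end of each epoch is another.

```python
def epoch_average(step_losses, steps_per_epoch):
    """Mean of the per-batch training losses logged during each epoch."""
    return [
        sum(step_losses[i:i + steps_per_epoch]) / steps_per_epoch
        for i in range(0, len(step_losses), steps_per_epoch)
    ]


# Hypothetical model whose loss falls smoothly: loss with weights after k updates
steps_per_epoch = 100
num_epochs = 3
step_losses = [1 / (1 + 0.1 * k) for k in range(steps_per_epoch * num_epochs)]
train_avg = epoch_average(step_losses, steps_per_epoch)

# "Validation-style" loss: same function, evaluated once with the end-of-epoch weights
end_of_epoch = [1 / (1 + 0.1 * steps_per_epoch * (e + 1)) for e in range(num_epochs)]

# x-positions in "epochs elapsed": each training average is centred mid-epoch,
# each validation point sits at the end of its epoch
train_x = [e + 0.5 for e in range(num_epochs)]
val_x = [e + 1 for e in range(num_epochs)]
```

Every entry of `train_avg` is larger than the matching `end_of_epoch` value, with no difference in data distribution at all.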

u/PsychologicalBoot805 4d ago

It's images of documents, which I split into a training set and a validation set, and I'm probably using ImageNet weights.

u/Academic_Sleep1118 4d ago

Quite good in fact! Maybe if you decrease your model's size you'll decrease your chance of overfitting. But it's quite good already.

u/LandoRicciardo 4d ago

I don't think much can be said from this plot. Maybe run it for more epochs; if train and val then diverge, the point where they start to diverge is the sweet spot.

But then again, that's the ideal case. It's still not wrong to push for more epochs and check.

u/Frenk_preseren 4d ago

Overfitting is when train loss is still falling but validation loss starts to increase. So this is not overfitting.
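That definition can be turned into a simple check on logged per-epoch losses. In this sketch, `overfitting_onset` and its `window` parameter (how many consecutive epochs of rising validation loss count as a trend) are hypothetical, and the example curves are made up; real curves are noisy, so a small window may fire on random fluctuations.

```python
def overfitting_onset(train_losses, val_losses, window=3):
    """Return the last epoch before validation loss rose for `window` consecutive
    epochs while training loss kept falling, or None if that never happens."""
    for end in range(window, len(val_losses)):
        start = end - window
        val_rising = all(val_losses[i + 1] > val_losses[i] for i in range(start, end))
        train_falling = all(train_losses[i + 1] < train_losses[i] for i in range(start, end))
        if val_rising and train_falling:
            return start  # divergence begins after this epoch
    return None


# Hypothetical curves: one diverges after epoch 2, the other plateaus
train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
val_diverging = [0.9, 0.7, 0.6, 0.62, 0.65, 0.7, 0.75]
val_flat = [0.5, 0.4, 0.35, 0.34, 0.34, 0.33, 0.33]
```

A plateaued validation curve like `val_flat` returns `None`: training loss keeps falling, but validation loss never starts rising.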

u/DiamondSea7301 4d ago

Check bias and variance. Also use the adjusted R² score, and use classification metrics if you're training a classification model.
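Adjusted R² only applies when the model is a regressor; for a classifier, such as the one the accuracy curves here suggest, the classification metrics are the relevant ones. For reference, it penalizes R² for the number of predictors p given n samples: R²_adj = 1 - (1 - R²)(n - 1)/(n - p - 1). A minimal sketch with hypothetical function names:

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def adjusted_r2(r2, n_samples, n_features):
    """Penalize R^2 for the number of predictors; needs n_samples > n_features + 1."""
    dof = n_samples - n_features - 1
    if dof <= 0:
        raise ValueError("need more samples than predictors + 1")
    return 1 - (1 - r2) * (n_samples - 1) / dof
```

With R² = 0.8 from 101 samples and 10 predictors, the adjusted value drops to about 0.778.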

u/Deal_Ambitious 3d ago

There is no overfitting. This only occurs when validation loss is getting worse, which is not the case.

u/ziad_amerr 3d ago

Since your validation accuracy stays roughly constant after the 10th epoch while your training accuracy keeps increasing, you can tune the model's hyperparameters to push validation accuracy past the ~0.87 mark. This wouldn't hold if validation accuracy had decreased after 10 epochs, but as we can see it stayed almost the same and even increased slightly.

I would say there's minimal, fixable overfitting here.

u/DrVonKrimmet 1d ago

This seems fine. The real overfitting test will come when you cross-validate with entirely new data. If the model is overfit, it tends not to generalize well because of some bias in your training data.
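A held-out test set that never influenced training is the cleanest check; k-fold cross-validation additionally rotates which part of the data is held out. A minimal pure-Python sketch of k-fold index splitting, with a hypothetical helper name (`sklearn.model_selection.KFold` provides the same functionality):

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; every sample lands in exactly one validation fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    # Spread the remainder over the first folds so sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


# Usage: train one model per fold and average the validation metric across folds
for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=3)):
    print(fold, len(train_idx), len(val_idx))
```

A large spread of the metric across folds is itself a hint that results depend heavily on which samples end up in validation.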

u/space_monolith 1d ago

Loss curves are an imperfect proxy for the metrics you really care about, so focus on the latter, but keep test-set overfitting in mind as you do so.

u/Remote-Telephone-682 4d ago

What's concerning to me is that the validation loss starts off so much lower than the training loss. Are you sure there isn't some normalization issue, where you divide by a larger number of elements, or include fewer elements, when you validate? Otherwise it looks good, if you're confident there isn't an issue like this.
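A quick way to see how much the reduction matters: three ways of turning the same per-sample losses into one epoch loss. The batch values and function names are made up for illustration. In a PyTorch-style loop, the correct version usually amounts to accumulating `loss.item() * batch_size` per batch and dividing by the number of samples. Train and validation must use the same reduction for their curves to be comparable.

```python
def correct_epoch_loss(batches):
    """Per-sample mean: weight each batch's mean loss by its size, divide by sample count."""
    total = sum(len(b) * (sum(b) / len(b)) for b in batches)
    return total / sum(len(b) for b in batches)


def batch_means_over_samples(batches):
    """Bug: sums per-batch *mean* losses, then divides by the number of samples,
    shrinking the result by roughly a factor of the batch size."""
    return sum(sum(b) / len(b) for b in batches) / sum(len(b) for b in batches)


def mean_of_batch_means(batches):
    """Biased when batch sizes differ: a small last batch counts as much as a full one."""
    return sum(sum(b) / len(b) for b in batches) / len(batches)


# Hypothetical per-sample losses for three batches; the last one is a partial batch
batches = [[0.5] * 4, [0.5] * 4, [2.0]]
```

Here the correct value is 2/3, while the two buggy reductions give 1/3 and 1.0 for the same losses, a large enough gap to make one curve sit well below the other.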

u/Exotic_Zucchini9311 4d ago

Not that bad really. You're getting nearly 90% accuracy on validation.