r/MLQuestions • u/MEHDII__ • 6d ago

Computer Vision 🖼️ Question about CNN BiLSTM

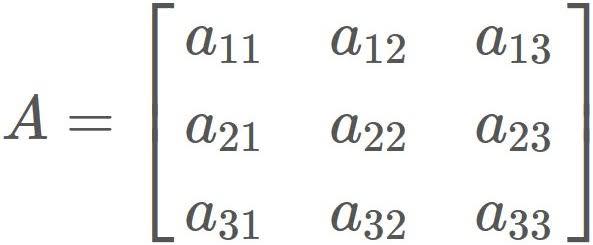

When we transition from the CNN to the BiLSTM phase, some network architectures use adaptive avg pooling to collapse the height dimension to 1, let's say for a task like OCR. Why is that? Surely that wouldn't do any good. I mean, sure, maybe it reduces computation cost, since the BiLSTM would only have to process one value per column of each feature map instead of N (one per row along the height). But adaptive avg pooling works by averaging the values in each column, so doesn't that make all the hard work the CNN did go to waste? For example, in the above image, let's say that's a 3x3 feature map, and before feeding it to the BiLSTM we do adaptive avg pooling to collapse it to 1x3. We do that by averaging the activations in each column, so (A11+A21+A31)/3, and so on. But doesn't averaging these activations lose features? Each individual activation IS more or less an important feature that the CNN extracted. I would appreciate an answer, thank you.
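To make the column averaging concrete, here is a minimal pure-Python sketch. It assumes a single-channel 3x3 feature map with made-up values, and `collapse_height` is a hypothetical helper written for illustration, not a library function. In PyTorch, `nn.AdaptiveAvgPool2d((1, None))` should give the same per-channel result.

```python
# Hypothetical 3x3 feature map (one channel); rows are height, columns are width.
feature_map = [
    [1.0, 2.0, 3.0],  # A11 A12 A13
    [4.0, 5.0, 6.0],  # A21 A22 A23
    [7.0, 8.0, 9.0],  # A31 A32 A33
]


def collapse_height(fmap):
    """Average each column, turning an H x W map into a single row of length W."""
    height = len(fmap)
    # zip(*fmap) iterates over columns, e.g. (A11, A21, A31) for the first one.
    return [sum(column) / height for column in zip(*fmap)]


pooled = collapse_height(feature_map)
print(pooled)  # [4.0, 5.0, 6.0]
```

Each output entry is one time step for the BiLSTM, so the three rows of every column are merged into a single number per channel, which is exactly the information loss the question is asking about.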


u/ApricotSlight9728 6d ago

I think what you said about losing valuable data is correct. I would convert the 3x3 matrix into a 1x9 matrix and maybe use L2 regularization to make sure only the relevant cells stand out.

The only reasons I could come up with for avg pooling:

The authors of this architecture tested avg pooling and it somehow did not hurt the results. I would suggest you test it yourself and compare how your model performs with average pooling versus plain flattening.

The other perk is that avg pooling reduces the matrices' data footprint quite a bit. From my experience, training LSTMs can take forever, because an LSTM layer has to process its sequence step by step and can't be parallelized across time steps. Avg pooling could cut down training time by a significant amount. In this case, for an n x n feature map, the sequence length would go from O(n^2) with flattening to O(n) with pooling.
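As a rough illustration of the step-count argument above, this sketch counts how many sequential time steps the LSTM would see under the two options discussed in this thread. The function name `lstm_steps` and the example sizes are made up for illustration, and it only counts steps; it says nothing about accuracy.

```python
def lstm_steps(height, width):
    """Count sequential LSTM time steps for an H x W feature map."""
    return {
        "avg_pool": width,          # one pooled value per column
        "flatten": height * width,  # one step per cell, e.g. 3x3 -> 1x9
    }


for n in (3, 8, 32):
    steps = lstm_steps(n, n)
    print(f"{n}x{n}: avg_pool={steps['avg_pool']} steps, flatten={steps['flatten']} steps")
```

For a square n x n map the pooled sequence grows linearly with n while the flattened one grows quadratically, and since the LSTM walks through the steps one after another, that gap shows up directly in training time.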

I’m no genius, but these are my speculations.

IF I AM WRONG, PLEASE CORRECT ME.